In the previous post, we created a custom Augraphy pipeline to model one type of document, producing output that resembled scans of real receipts. There, we worked from an operational definition of our goal: a description of documents we hypothetically had in our possession. We never sat down with actual documents and tried to replicate them synthetically. We’ll do that now.

In this post, we’ll replicate some images from the NoisyOffice dataset using only Augraphy augmentations.

Note: We won’t focus on the specifics of constructing a pipeline here; for that, you should check the previous post.

By the end of this tutorial, you’ll be able to use Augraphy to reproduce your own noisy documents; from there, you can train a neural network on the results and use it to automatically clean up your scans.

Getting Started

As usual, the work for this post is available in a Google Colab notebook, so you can follow along there if you’d prefer not to clone the repository and work locally.

Let’s download the NoisyOffice set and take a look at some of the pictures we’ll try to replicate. The images we want are in the real_noisy_images_grayscale_doubleresolution subdirectory of NoisyOffice/RealNoisyOffice.
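If you’re working locally, a quick way to see what’s in that subdirectory is to list its image files. This is a minimal sketch that assumes the archive was extracted into the current working directory; the path is assembled from the directory names above, and `list_images` is a hypothetical helper, so adjust both to your setup.

```python
from pathlib import Path

# Assumed location of the extracted dataset; change this to match your setup.
SAMPLE_DIR = Path("NoisyOffice/RealNoisyOffice/real_noisy_images_grayscale_doubleresolution")


def list_images(directory, extensions=(".png", ".jpg", ".jpeg", ".tif", ".tiff")):
    """Return the image files directly inside `directory`, sorted by path."""
    directory = Path(directory)
    return sorted(
        p for p in directory.iterdir()
        if p.is_file() and p.suffix.lower() in extensions
    )


if __name__ == "__main__":
    if SAMPLE_DIR.is_dir():
        for path in list_images(SAMPLE_DIR):
            print(path.name)
    else:
        print(f"{SAMPLE_DIR} not found; check where the dataset was extracted.")
```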

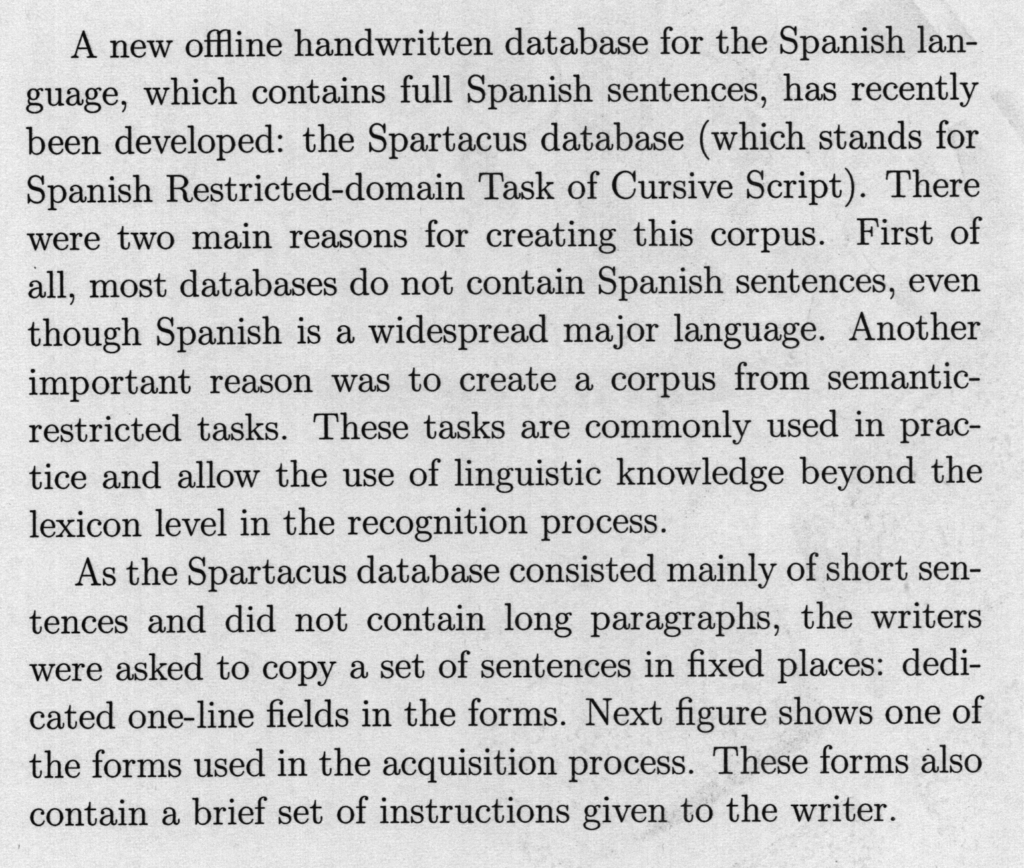

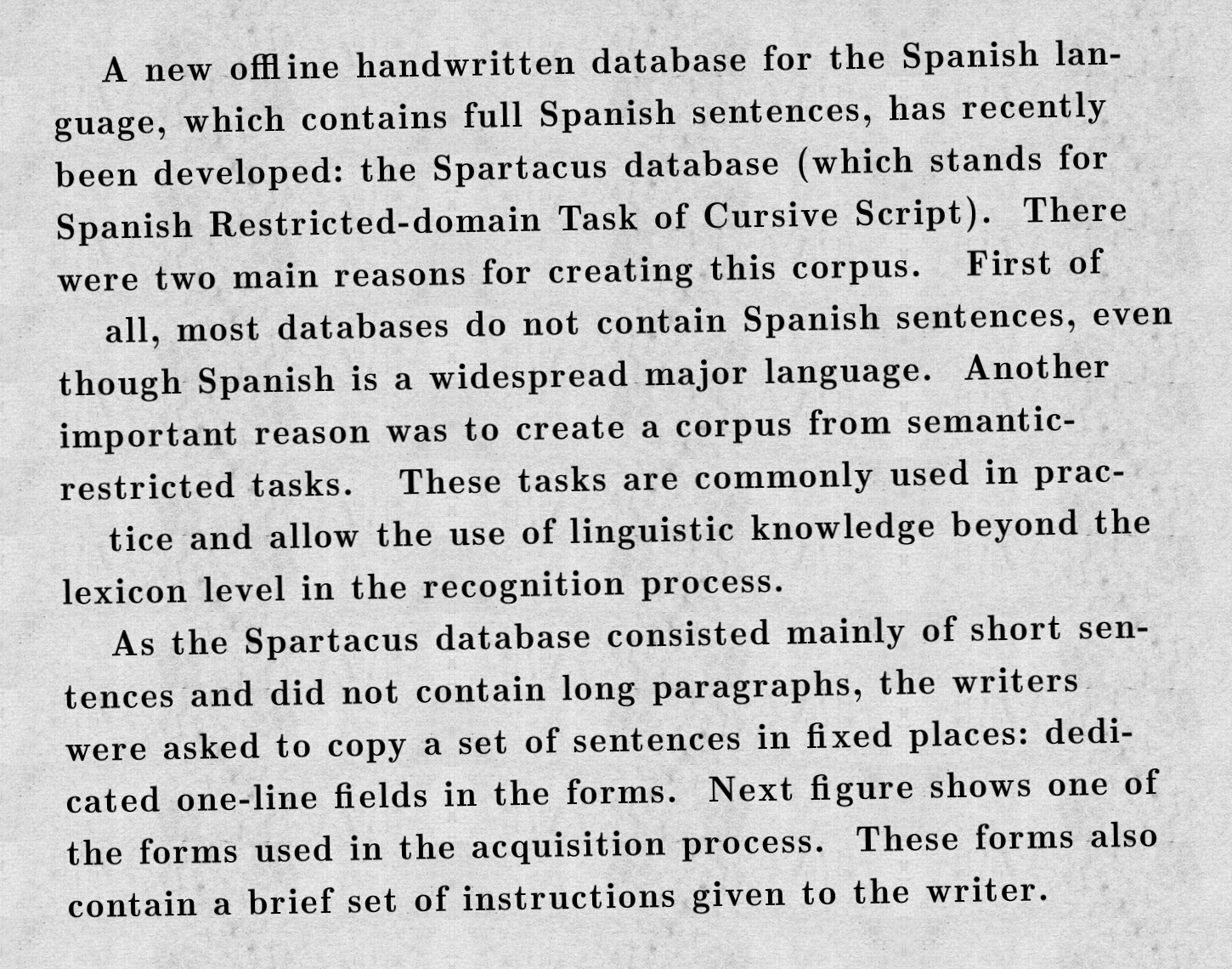

Here’s a representative:

Identifying Augmentations

To start, we need to examine our sample image and determine how it differs from a “clean” document, fresh from the office printer.

In the image above, we can see some texture in the underlying paper, including some minor staining and shadows. The background is clearly darker than a normal white page, so we’ll need to adjust the brightness. The text has also been subtly rotated by a few degrees counterclockwise. Importantly, it doesn’t look like the text itself has been visibly damaged or distorted, so we won’t need to alter the ink layer.
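One way to put a number on “darker than a normal white page” is to look at the brightest pixels of a grayscale scan, which in a text document are mostly bare paper. The sketch below is our own heuristic, not something Augraphy provides: `estimate_paper_level` (a hypothetical name) treats the brightest half of the pixels as paper and reports their median relative to pure white (255). The 50% cutoff is an assumption; tune it for pages with more or less text.

```python
import numpy as np


def estimate_paper_level(gray, paper_fraction=0.5):
    """Estimate paper brightness as a fraction of pure white (255).

    Assumes `gray` is a 2-D grayscale image in which the brightest
    `paper_fraction` of pixels are mostly bare paper rather than ink.
    """
    if not 0.0 < paper_fraction <= 1.0:
        raise ValueError("paper_fraction must be in (0, 1]")
    values = np.sort(np.asarray(gray, dtype=np.float64).ravel())
    # Keep only the brightest pixels, which we treat as paper.
    start = int(len(values) * (1.0 - paper_fraction))
    return float(np.median(values[start:])) / 255.0


if __name__ == "__main__":
    # Synthetic page: light-gray paper (240) with a dark "text" block.
    page = np.full((100, 100), 240, dtype=np.uint8)
    page[40:60, 10:90] = 30
    print(f"paper level: {estimate_paper_level(page):.3f}")  # paper level: 0.941
```

Comparing this figure for a sample scan with the same measurement on a clean ground truth gives a rough target for how far the Brightness augmentation should darken the paper.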

Augraphy already includes a few augmentations for producing paper texture, one of which is NoiseTexturize. We’ll also use the Brightness augmentation to darken the underlying paper. The stains are a little more difficult to reproduce, but we can cheat: we have the image we’d like to reproduce, so we can steal its background!

Producing the Ground Truth

Augraphy pipelines start with an input image, called the ground truth. This can be as simple as a screenshot of a document in your favorite text editor.

NoisyOffice includes clean versions of the images, as they were before printing and degradation, for use in training models. Here, though, we’ll try our best to reproduce such a clean image ourselves rather than use the one provided.

Selecting the Font

This is a bit of a challenge, as many fonts look very similar. If you’re reproducing documents from your own business, you may be able to get the necessary information just by asking around. In this case, we can make an educated guess that the producers of NoisyOffice used a popular word processor and font, and see where that gets us. I’ll type the same text into LibreOffice Writer so we can modify the font as we go.

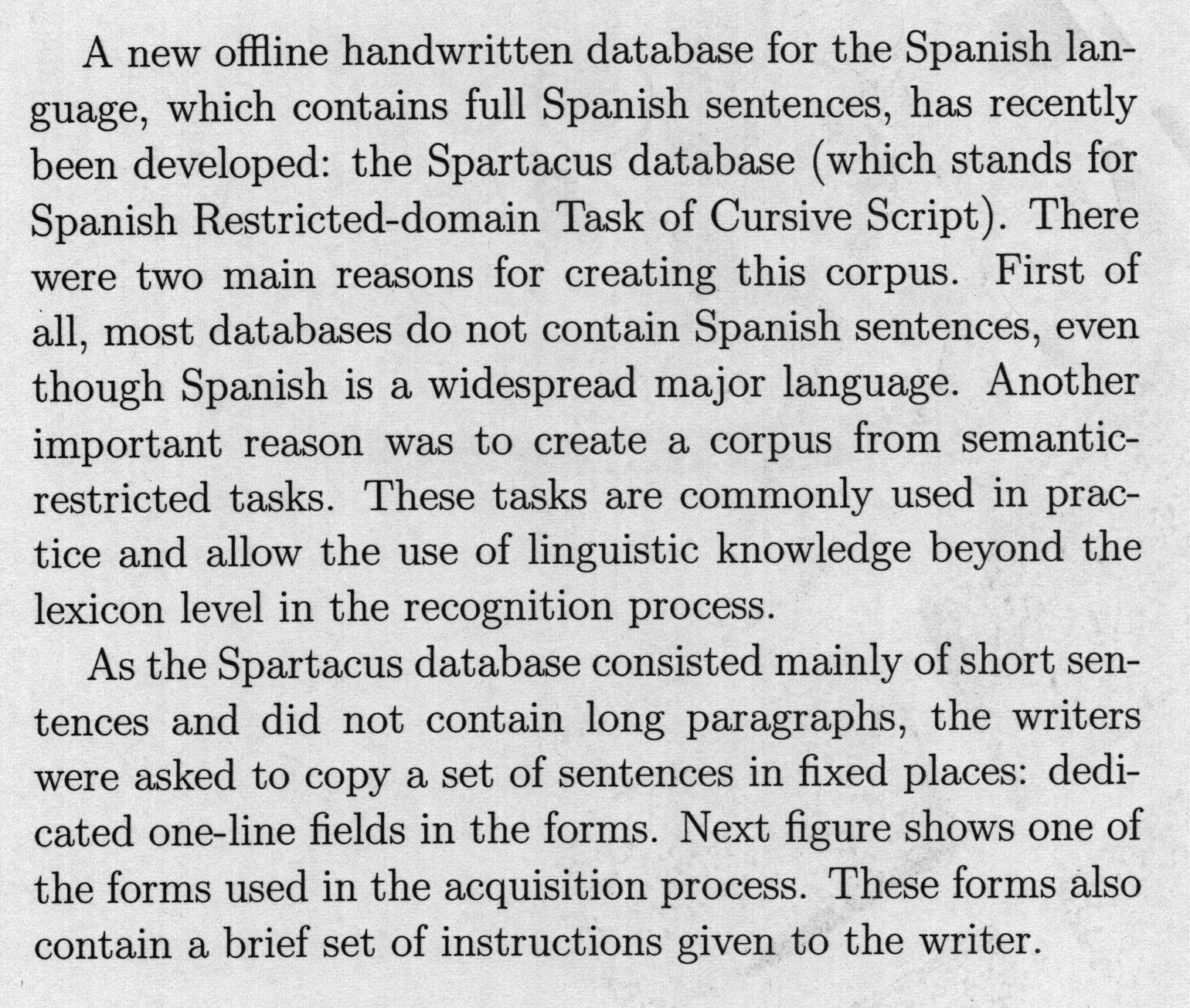

Look at the word “database” in the first line. The lowercase a has a little tail, and the s has small strokes coming off the beginning and end of its curve; these are called serifs. We’re dealing with a serifed font, which narrows things down a lot! What if we just set the font to Times New Roman, probably the most popular serifed font in the world? Here’s a sample of the result:

Not good enough. The difference is really noticeable in the repeated ‘f’ characters. We need something else.

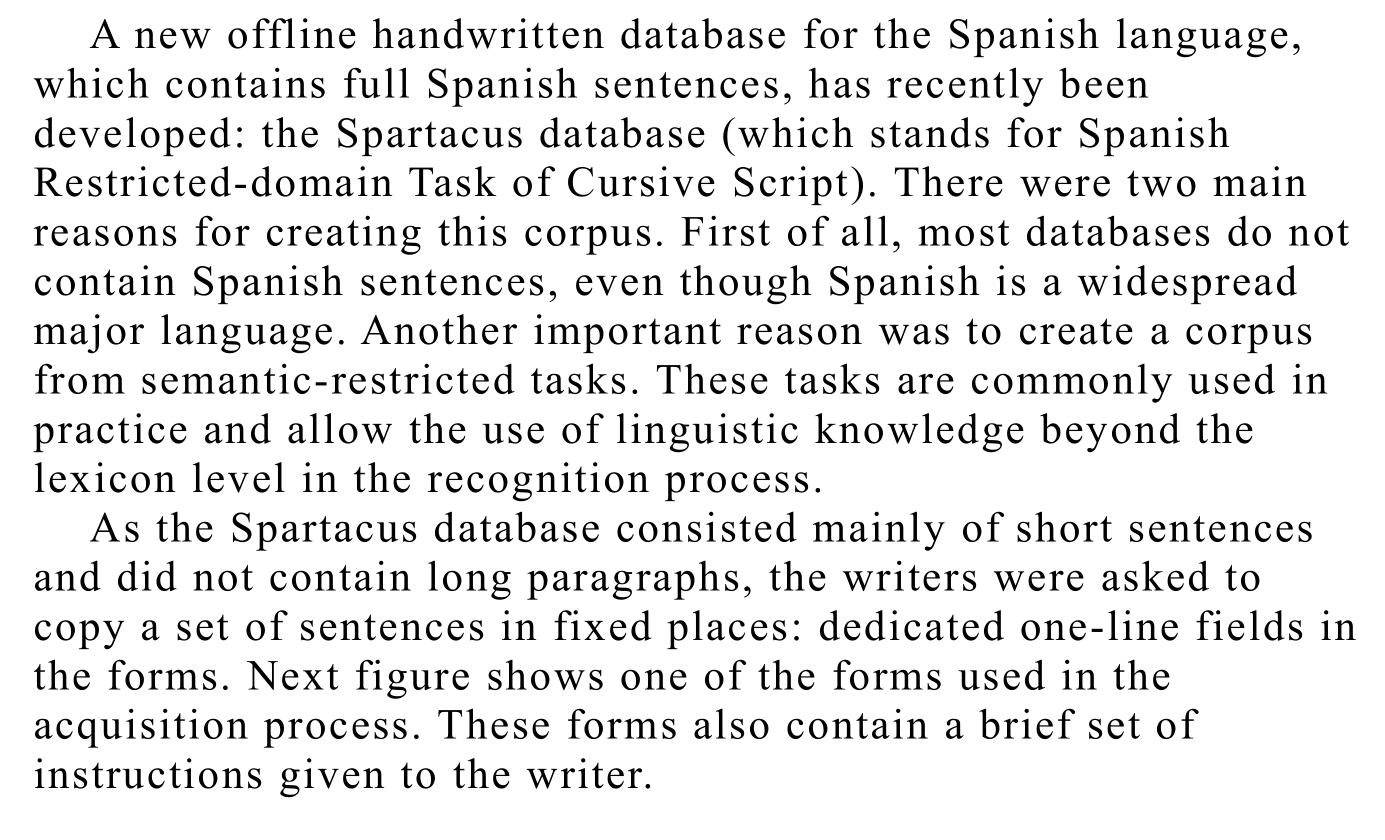

I tried a few more serifed fonts and stumbled upon one that looked very close: Latin Modern Roman.

Without contacting the authors, it’s hard to say what the original font was, but this one looks reasonably close to the sample image. We could do better, but this author is not well-versed in typography and didn’t want to spend extra time manually testing more fonts for an even closer match.

Page Style

Matching the page layout required changing the paragraph spacing, increasing the first-line indentation of each paragraph, manually hyphenating word breaks, and adjusting the page margins. Expect to make similar adjustments when recreating your own documents.

Background

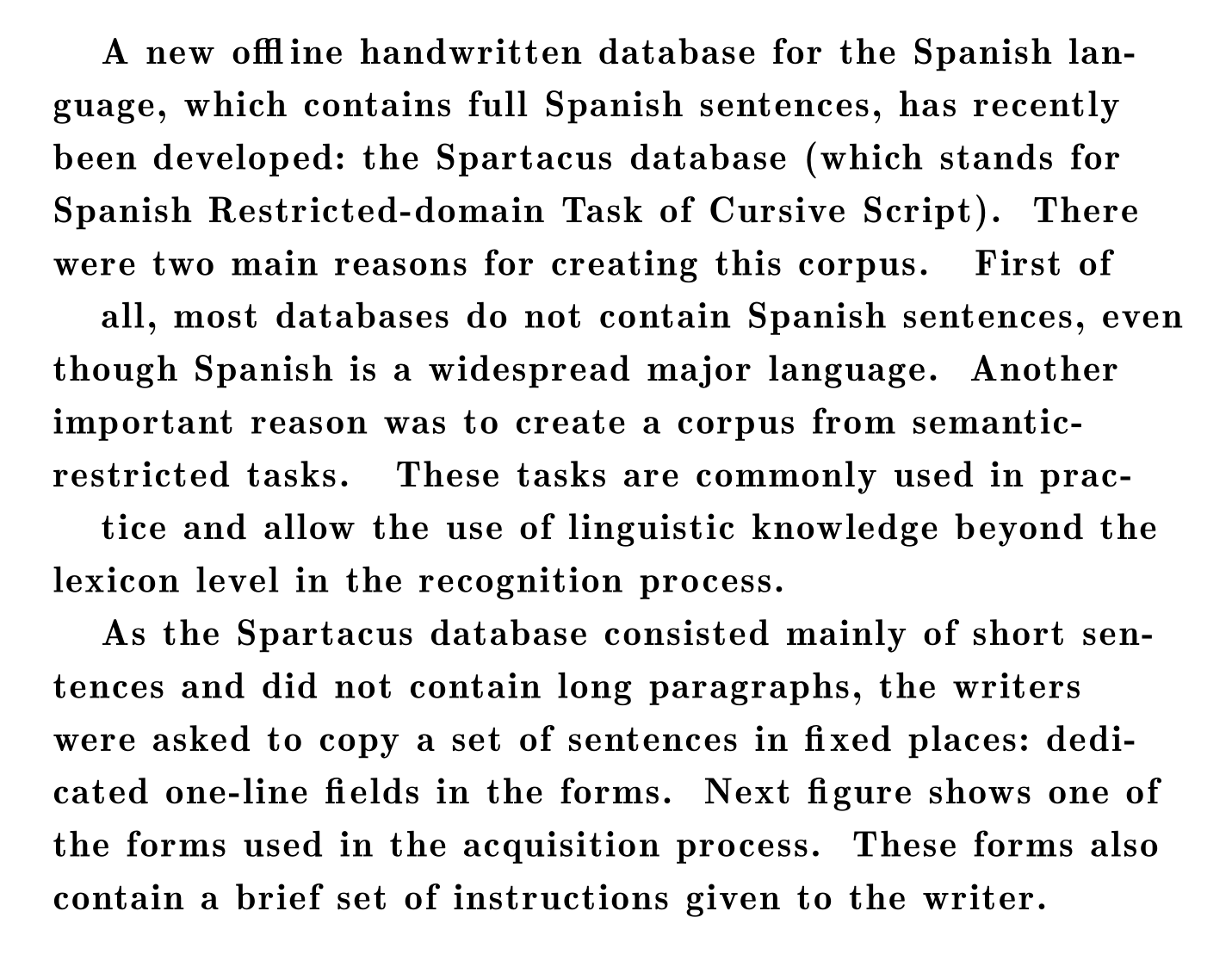

We’ll take our sample image and crop it to a section not containing text, but with some non-uniform texture. I decided to use this strip from the space between the two paragraphs:
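Cropping can be done in any image editor, but since OpenCV loads images as NumPy arrays of shape (rows, columns[, channels]), it’s also just an array slice. In the sketch below, `crop_region` is a hypothetical helper and the offsets are placeholders, not the ones used for this post; only the 90 × 532 size matches the tile_texture_shape used later, which we read (as an assumption) as (height, width).

```python
import numpy as np


def crop_region(image, top, left, height, width):
    """Return a copy of image[top:top+height, left:left+width].

    Raises ValueError if the requested region is empty or falls outside the image.
    """
    rows, cols = image.shape[:2]
    if height <= 0 or width <= 0:
        raise ValueError("height and width must be positive")
    if top < 0 or left < 0 or top + height > rows or left + width > cols:
        raise ValueError("crop region extends beyond the image bounds")
    # .copy() so the crop doesn't keep a view into the full-size image.
    return image[top:top + height, left:left + width].copy()


if __name__ == "__main__":
    # Stand-in for cv2.imread("sample.png"); load a real scan instead.
    sample = np.zeros((1200, 800), dtype=np.uint8)
    # Hypothetical offsets for the blank strip between the two paragraphs.
    strip = crop_region(sample, top=500, left=130, height=90, width=532)
    print(strip.shape)  # (90, 532)
    # With OpenCV installed, save it for PaperFactory: cv2.imwrite("background.png", strip)
```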

Building the Pipeline

To modify this document, we’ll build a simple Augraphy pipeline containing the PaperFactory, NoiseTexturize, and Brightness augmentations along with a slight rotation, and run that pipeline over our ground truth image. We want to perform three actions:

- texturize the background to match the effect we see in the sample image,

- darken the background,

- slightly rotate the print counterclockwise, by one or two degrees.

The background will be generated from the texture we cropped from the sample image, using Augraphy’s PaperFactory. It expects one or more textures in the paper_textures directory, so we’ll move background.png there now.
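Moving the file is a one-liner in the shell; here is an equivalent Python sketch. It assumes the paper_textures directory lives in (or should be created in) the current working directory, which is an assumption about your project layout, and `install_texture` is a hypothetical helper name.

```python
import shutil
from pathlib import Path


def install_texture(texture_file, texture_dir="paper_textures"):
    """Move `texture_file` into `texture_dir`, creating the directory if needed.

    Returns the texture's new path.
    """
    texture_dir = Path(texture_dir)
    texture_dir.mkdir(parents=True, exist_ok=True)
    destination = texture_dir / Path(texture_file).name
    shutil.move(str(texture_file), str(destination))
    return destination


if __name__ == "__main__":
    if Path("background.png").exists():
        print(install_texture("background.png"))
```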

Here’s how we express our needs with Augraphy:

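```python
import cv2

from augraphy import AugraphyPipeline, Brightness, NoiseTexturize, PaperFactory

# The clean document we want to degrade.
img = cv2.imread("ground_truth.png")

paper_phase = [
    # Build the paper from textures in paper_textures/; (90, 532) is the
    # native size of the background strip we cropped.
    PaperFactory(tile_texture_shape=(90, 532), p=1),
    # Darken the paper by 2-5%.
    Brightness(layer="paper", range=(0.95, 0.98), p=1),
    # Add random noise texture to the paper.
    NoiseTexturize(sigma_range=(15, 20), turbulence_range=(2, 3), p=1),
]

pipeline = AugraphyPipeline(
    None,                 # ink phase: no ink augmentations
    paper_phase,          # paper phase
    None,                 # post phase: no post-processing augmentations
    rotate_range=(1, 2),  # rotate the result by one to two degrees
)
```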

Setting Parameters

By default, augmentations have a 50% probability of being applied, which increases the variability of pipeline outputs. We override this by passing p=1 to each augmentation’s constructor to guarantee that it runs.
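Conceptually, p acts as a weighted coin flip in front of each augmentation. The plain-Python sketch below illustrates that idea; it is a simplified model written for illustration, not Augraphy’s actual implementation, and `maybe_apply` is a hypothetical helper.

```python
import random


def maybe_apply(augmentation, image, p, rng=random):
    """Apply `augmentation` to `image` with probability p; otherwise return image unchanged."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    # rng.random() is in [0, 1), so p=1 always applies and p=0 never does.
    if rng.random() < p:
        return augmentation(image)
    return image


if __name__ == "__main__":
    rng = random.Random(0)
    darken = lambda value: value - 10  # toy "augmentation" acting on one number
    runs = [maybe_apply(darken, 200, p=0.5, rng=rng) for _ in range(1000)]
    applied = sum(1 for r in runs if r == 190)
    print(f"p=0.5 applied {applied} of 1000 times")
    assert all(maybe_apply(darken, 200, p=1, rng=rng) == 190 for _ in range(100))
```

With p=0.5 the count hovers around 500 and changes from seed to seed, which is exactly the run-to-run variability we remove by setting p=1.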

We want to retain all of the detail in the texture we borrowed from the sample image, so in PaperFactory we set tile_texture_shape to the native dimensions of that cropped image.

The Brightness augmentation can be applied in multiple layers, so we need to tell it which one to act on. It also takes a float range from which it samples a brightness factor. We don’t want the background to get too dark, so we’ll set this range to 0.95 to 0.98, corresponding to a decrease in background brightness of between 2% and 5%.
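To make the arithmetic concrete: if the paper is scaled by a factor drawn from (0.95, 0.98), a pure-white pixel (255) ends up between 242 and 249 once converted back to 8-bit integers (255 × 0.95 = 242.25, 255 × 0.98 = 249.9). The sketch below assumes Brightness behaves like a simple multiplicative scaling of pixel intensities; the real augmentation may work differently (for example, in another color space), so treat `scale_brightness` (a hypothetical name) as an illustration of the range, not a reimplementation.

```python
import random

import numpy as np


def scale_brightness(image, factor_range=(0.95, 0.98), rng=random):
    """Multiply intensities by a factor drawn uniformly from factor_range.

    Returns (scaled uint8 image clipped to [0, 255], factor used).
    """
    factor = rng.uniform(*factor_range)
    scaled = np.asarray(image, dtype=np.float64) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8), factor


if __name__ == "__main__":
    white_page = np.full((4, 4), 255, dtype=np.uint8)
    darker, factor = scale_brightness(white_page, rng=random.Random(1))
    print(f"factor={factor:.3f}, pixel={darker[0, 0]}")
```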

NoiseTexturize is harder to dial in than the other two; there’s an art to it. The sigma_range parameter sets the bounds of the random noise fluctuations, while turbulence_range controls how quickly large noise patterns are replaced by smaller ones, and so how strongly the background texture is altered.
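For intuition about the two parameters, here is a small NumPy sketch of multi-scale noise in the same spirit: Gaussian noise with standard deviation sigma is drawn on a coarse grid, upsampled to the image size, and added; the grid cells are then shrunk by a factor of turbulence and the process repeats down to single pixels. This is our own illustrative model, not Augraphy’s source, and `multiscale_noise` is a hypothetical name.

```python
import numpy as np


def multiscale_noise(image, sigma, turbulence, rng=None):
    """Add layered Gaussian noise to a 2-D grayscale image (illustrative sketch).

    Each pass draws noise with standard deviation `sigma` on a grid of
    `cell`-pixel cells, upsamples it by repetition, and adds it. The cell
    size is then divided by the integer `turbulence` until it reaches 1.
    """
    if turbulence < 2:
        raise ValueError("turbulence must be at least 2 so the loop terminates")
    rng = np.random.default_rng() if rng is None else rng
    rows, cols = image.shape
    result = image.astype(np.float64)
    cell = max(rows, cols)
    while True:
        # Coarse grid just large enough to cover the image once upsampled.
        grid_rows = -(-rows // cell)  # ceiling division
        grid_cols = -(-cols // cell)
        coarse = rng.normal(0.0, sigma, size=(grid_rows, grid_cols))
        upsampled = np.repeat(np.repeat(coarse, cell, axis=0), cell, axis=1)
        result += upsampled[:rows, :cols]
        if cell == 1:
            break
        cell = max(cell // turbulence, 1)
    return np.clip(result, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    paper = np.full((90, 532), 235, dtype=np.uint8)
    textured = multiscale_noise(paper, sigma=15, turbulence=2, rng=np.random.default_rng(0))
    print(textured.shape, textured.dtype, int(textured.min()), int(textured.max()))
```

Because every pass adds its own noise, a smaller turbulence in this model also means more passes and a stronger overall effect, which is one reason the two parameters interact and take some trial and error to tune.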

We don’t want augmentations in the ink or post phases, so we pass None for those positional arguments.

Finally, we want to rotate the document counterclockwise by one or two degrees, so we provide a rotate_range from which to sample the rotation angle.
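Sampling from rotate_range presumably amounts to drawing an angle between the two bounds. To show what a rotation by that angle does to pixel coordinates, the sketch below builds the same 2 × 3 affine matrix that OpenCV’s cv2.getRotationMatrix2D documents for a rotation about a center point, in which positive angles rotate counterclockwise. We haven’t verified which convention this version of Augraphy uses internally, so check the direction against your own output; `rotation_matrix` and `transform_point` are hypothetical helpers.

```python
import math
import random


def rotation_matrix(center, angle_deg, scale=1.0):
    """2x3 affine matrix rotating about `center` by `angle_deg` degrees.

    Follows the formula documented for cv2.getRotationMatrix2D: with the
    origin at the image's top-left corner, a positive angle is counterclockwise.
    """
    cx, cy = center
    alpha = scale * math.cos(math.radians(angle_deg))
    beta = scale * math.sin(math.radians(angle_deg))
    return [
        [alpha, beta, (1 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1 - alpha) * cy],
    ]


def transform_point(matrix, point):
    """Apply a 2x3 affine matrix to an (x, y) point."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )


if __name__ == "__main__":
    angle = random.uniform(1, 2)  # sample like rotate_range=(1, 2)
    m = rotation_matrix(center=(400, 600), angle_deg=angle)
    print(f"angle={angle:.2f}, center maps to {transform_point(m, (400, 600))}")
```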

Augmenting the Ground Truth

We now use our pipeline to augment the image and view the result. Because Augraphy uses a probabilistic system to produce new documents, we’re not guaranteed to get exactly what we want on the first try, and this is a good thing! Not every document in a real-world set will have the same degree or type of distortion. If you’re trying to reproduce a sample image, as we’re doing now, expect to run the pipeline a few times before it generates a suitable copy.

```python
augmented = pipeline.augment(img)

# Show the result; waitKey keeps the window open until a key is pressed.
# (cv2.imshow doesn't work in Colab; use google.colab.patches.cv2_imshow there.)
cv2.imshow("augmented", augmented["output"])
cv2.waitKey(0)
cv2.destroyAllWindows()
```

Our pipeline produced this output on the fourth run. Not bad!
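Since finding a suitable copy can take several runs, it may be convenient to generate a batch of candidates at once and compare them. A minimal sketch: `generate_candidates` is a hypothetical helper that accepts any callable, so with the pipeline above you could pass `lambda im: pipeline.augment(im)["output"]`. The optional score function (for example, a distance to the sample scan) is also an assumption; rank candidates however suits you.

```python
import random
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


def generate_candidates(
    augment: Callable[[T], T],
    image: T,
    n: int = 8,
    score: Optional[Callable[[T], float]] = None,
) -> List[T]:
    """Run `augment` on `image` n times and return the outputs.

    If `score` is given, outputs are sorted so the lowest score comes first.
    """
    outputs = [augment(image) for _ in range(n)]
    if score is not None:
        outputs.sort(key=score)
    return outputs


if __name__ == "__main__":
    rng = random.Random(42)
    # Toy stand-in for the real pipeline: randomly "darken" a brightness value.
    fake_augment = lambda value: value * rng.uniform(0.9, 1.0)
    target = 240.0  # hypothetical brightness we're trying to match
    ranked = generate_candidates(fake_augment, 255.0, n=5, score=lambda v: abs(v - target))
    print([round(v, 1) for v in ranked])
```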

Reproducing More Documents

For brevity, we selected just one image from the NoisyOffice set, but in a real business setting you’d choose several images representative of the distortions in your document library. Reproducing these additional images is a matter of adding augmentations that match the effects present in those documents.

Because of the probabilistic augmentation system described above, it’s best to build a separate pipeline for each type of document you want to reproduce, so you can test them in isolation. Once you’ve dialed in the parameters and are satisfied with the outputs, you can combine the unique augmentations from all these pipelines into one big pipeline, and loop its augment method over your ground truth collection to produce a large set of training data.
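The final loop might look something like the sketch below. It’s written against a generic callable so it doesn’t depend on a particular Augraphy version: `augment_collection` and `variants_per_image` are hypothetical names, and in practice `augment` would wrap your combined pipeline (for example, `lambda im: pipeline.augment(im)["output"]`) while the images would come from reading your ground truth files with cv2.imread. Keeping each clean image next to its noisy copies gives paired data for training a denoising model.

```python
from typing import Callable, Dict, Hashable, List, Tuple, TypeVar

T = TypeVar("T")


def augment_collection(
    images: Dict[Hashable, T],
    augment: Callable[[T], T],
    variants_per_image: int = 3,
) -> List[Tuple[Hashable, int, T, T]]:
    """Return (key, variant_index, clean, noisy) tuples for every ground truth.

    Several variants per image take advantage of the pipeline's randomness
    to multiply the size of the training set.
    """
    if variants_per_image < 1:
        raise ValueError("variants_per_image must be at least 1")
    pairs = []
    for key, clean in images.items():
        for i in range(variants_per_image):
            pairs.append((key, i, clean, augment(clean)))
    return pairs


if __name__ == "__main__":
    ground_truths = {"letter_01.png": 255, "memo_02.png": 250}  # toy stand-ins for images
    fake_augment = lambda value: value - 10
    for key, i, clean, noisy in augment_collection(ground_truths, fake_augment, 2):
        print(key, i, clean, noisy)
```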